LLM optimization matters because AI tools like ChatGPT and Google’s AI Overviews now answer questions directly, often removing the need to click. Nearly 60% of Google searches end without a click, a share that climbed from about 54% in 2017 to over 62% in 2021. As a result, only about 360 of every 1,000 searches send visitors to non‑Google sites.

Mobile behavior highlights the shift, with just 17.3% of mobile searches leading to a click. Notably, factual and weather queries see zero-click rates of 77% and 85%, respectively.

LLM Optimization (LLMO) bridges this transition by structuring your content and establishing authority, ensuring machines surface it in generated answers. nDash helps brands develop data‑rich pages that large language models can find, understand, and cite.

What is LLM Optimization and Why It Matters

LLMO is the practice of structuring your content so that LLMs can find, understand, and cite it in AI-generated answers. AI products affected by this strategy include ChatGPT, Google’s AI Overviews (formerly SGE), Perplexity, and Claude.

As AI responses replace clicks, LLMO becomes the next logical step after SEO. Instead of ranking in link lists, your goal is to be the answer. James McMinn Jr., Senior Digital Strategist at Matchbox Design Group, discussed this in one of his latest newsletter updates. He explains, “We’re not optimizing for search engines anymore—we’re optimizing for answer engines. And if you’re not training the bots to know your name, someone else is.”

In a zero‑click world, traditional SEO still matters, but it’s not enough. (More on zero-click and its importance in a minute.) Optimizing for LLMs now positions your brand to appear in AI-powered answers, securing a share of voice where search engines once drove traffic.

👉 Click here to explore how generative AI is reshaping modern SEO: Is AI Reshaping SEO? Potential Disruptions in Traditional Practices – nDash.com

From SEO to LLMO: What’s Changed

User behavior has shifted dramatically. Instead of clicking through search result links, many now ask AI platforms directly. Tools like ChatGPT, Perplexity, and Google’s Search Generative Experience (SGE) provide complete responses inline, without requiring a link. Unlike SEO, which focuses on keywords, backlinks, and organic listing positions, LLMO prioritizes being understood and citable by AI systems.

SEO targets ranking on Google SERPs to attract users to click through to a website. LLMO, in contrast, focuses on ensuring a brand is visible within the AI-generated answer itself. This visibility is crucial for placement in both conversational chatbots and AI Overviews that appear at the top of search pages.

Why LLM Visibility Is the New Traffic Driver

Rand Fishkin, SparkToro’s CEO and co-founder, published a zero-click search study showing that roughly 60% of Google searches in the U.S. now end without a click, a number driven largely by AI Overviews and other zero-click results. Studies show that on pages with AI summaries, clicks to external sources fall to as low as 1%. If AI platforms aren’t citing your brand, you’re simply invisible to these users.

Brands that fail to appear in AI-generated answers lose access to high-quality traffic. A recent Semrush report indicates that visitors arriving through AI search convert 4.4 times more often than those from traditional organic search. Optimizing for LLM visibility is now a key factor in attracting conversion-ready audiences.

Step 1: Roll Out the AI Welcome Mat

Step 1 involves making your website accessible to AI crawlers, enabling them to read and cite your content in their answers.

Cloudflare says bots now make up about 30% of all web traffic, with AI and search crawler activity climbing 18% year over year. In that time, GPTBot’s share of AI crawler traffic jumped from 5% to 30%, while ByteSpider’s dropped from 42% to just 7%, a sign of how fast LLM crawlers are reshaping the landscape.

Even so, many sites are still shutting these bots out. Ahrefs found that about 6% of robots.txt files block GPTBot, and that figure climbs past 7% when you count subdomains. The blocking of ClaudeBot is up by nearly a third. This hesitation is happening even though AI sources currently send only 0.1% of referral traffic, compared with 44% from search, 42% from direct visits, and 13% from social. Opening your site to AI crawlers is less about today’s traffic than about where the web is heading.

Block rates also depend on the industry. Arts and entertainment sites block bots nearly half the time, and law or government sites block them about 42% of the time. These sectors, in particular, may need to rethink their approach if they want to stay visible in an AI‑driven future.

Your brand’s visibility in AI-generated answers starts with one critical factor: accessibility. If LLM crawlers like GPTBot and ClaudeBot can’t access your site, they can’t learn from or reference your content.

Like traditional search crawlers, these AI bots check your robots.txt rules before reading and processing pages, so a single disallow rule is enough to keep them out. Ensuring your site is LLM-friendly is the first step to being included in AI-driven conversations.

👉 Click here to learn how to make your site AI‑accessible with schema and bots: Optimizing Your Website for SEO and AI with AI-Friendly Schema: A Guide for Digital Marketers – nDash.com

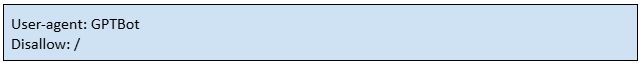

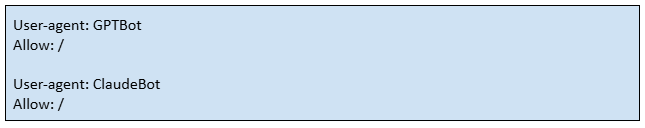

Don’t Block GPTBot or ClaudeBot in robots.txt

Your robots.txt file determines which bots can access your site. While blocking aggressive crawlers is standard, blocking AI bots like GPTBot or ClaudeBot cuts you off from AI-generated answers.

Quick check: Review your robots.txt for disallow rules targeting these bots, update permissions if needed, and confirm changes with a crawl test.

Here’s how to make sure you’re not shutting the door:

- Locate your robots.txt file: You can usually find it at https://yourdomain.com/robots.txt. Open it in a browser to review its contents.

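- Look for disallow rules: Scan for lines that block GPTBot or ClaudeBot. A blocked crawler typically appears as a “User-agent: GPTBot” line followed by “Disallow: /”.

- Allow AI crawlers to access your content: If blocked, update your file to allow them explicitly, for example by replacing the “Disallow: /” rule under that user-agent with “Allow: /”.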

- Test your changes: Use tools like cURL or online robots.txt checkers to confirm the bots can crawl your site successfully.
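The checks above can be sketched in Python with the standard library's robots.txt parser. This is a minimal illustration, not a full audit: the two sample files and the yourdomain.com URL are placeholders, and real crawlers may interpret edge cases differently than this parser does.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that shuts AI crawlers out entirely.
BLOCKED = """\
User-agent: GPTBot
Disallow: /

User-agent: ClaudeBot
Disallow: /
"""

# Hypothetical robots.txt that allows them explicitly while
# keeping a private area closed to every other bot.
ALLOWED = """\
User-agent: GPTBot
Allow: /

User-agent: ClaudeBot
Allow: /

User-agent: *
Disallow: /admin/
"""

AI_BOTS = ("GPTBot", "ClaudeBot")


def ai_bot_access(robots_txt: str, url: str = "https://yourdomain.com/") -> dict[str, bool]:
    """Report whether each AI crawler may fetch `url` under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {bot: parser.can_fetch(bot, url) for bot in AI_BOTS}


if __name__ == "__main__":
    print("blocked file:", ai_bot_access(BLOCKED))
    print("allowed file:", ai_bot_access(ALLOWED))
```

To test a live site, you could paste the contents of your own robots.txt into the function in place of the sample strings.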

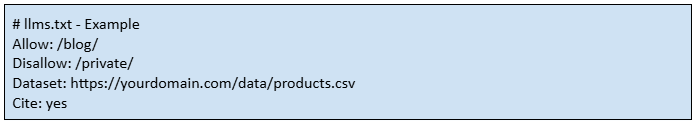

Add an llms.txt File

The llms.txt file is a proposed, still-emerging convention that gives LLMs explicit guidance on how to interact with your site. Think of it as a companion to robots.txt, designed specifically for LLMs. While robots.txt tells bots what they can or cannot crawl, llms.txt goes further, helping AI understand which content is authoritative, where structured data lives, and what assets are safe to cite.

Why it matters:

- Guides AI models directly on what to use and where to find it.

- Boosts citation accuracy, improving the likelihood that your brand is credited.

- Flags sensitive areas by clearly specifying what is off-limits (the file is advisory, so pair it with real access controls).

How to create an llms.txt file:

- Create a plain text file named llms.txt (the proposal uses Markdown formatting).

- Include clear instructions, such as data locations and citation policies.

- Place it at your domain root, like https://yourdomain.com/llms.txt.

Example structure:
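One way to picture the file is to generate it. The sketch below assumes the Markdown layout described in the llms.txt proposal (a title, a short summary, then sections of annotated links). The company name, URLs, and section names are all hypothetical, and no AI provider is guaranteed to read the result.

```python
def build_llms_txt(
    site_name: str,
    summary: str,
    sections: dict[str, list[tuple[str, str, str]]],
) -> str:
    """Render an llms.txt body: H1 title, blockquote summary, H2 link lists."""
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Hypothetical site details, for illustration only.
LLMS_TXT = build_llms_txt(
    site_name="Example Co",
    summary="Example Co publishes product specs and original industry research.",
    sections={
        "Authoritative pages": [
            ("Product guide", "https://yourdomain.com/guide", "Canonical product overview"),
        ],
        "Data": [
            ("Product data (JSON)", "https://yourdomain.com/data/products.json", "Structured product feed"),
        ],
        "Citation policy": [
            ("How to cite us", "https://yourdomain.com/citation-policy", "Preferred attribution and brand name"),
        ],
    },
)

if __name__ == "__main__":
    print(LLMS_TXT)
```

Saving the returned text as llms.txt at your domain root would complete the steps listed above.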

Best Practices

- Keep the file updated as your site evolves.

- Include links to structured data (CSV, JSON) to facilitate easy ingestion.

- Clearly state your citation preferences to encourage proper attribution.

Adding llms.txt is a proactive step that helps LLMs interpret your content accurately and cite it consistently in AI-generated answers.

Step 2: Structure Content for Instant AI Answers

Step 2 is all about structuring your content so AI models can pull out answers without digging. SurferSEO’s review of 400,000 AI Overviews found the typical AI response is only 157 words, and nearly all stay under 328.

Unlike traditional search engines, LLMs don’t reward keyword stuffing or long-winded intros. They look for content that’s clean, clear, and easy to lift into a response. Avoid burying your key points. Otherwise, the model may miss them altogether.

Another insight: The exact search query shows up in only about 5% of AI answers. Models paraphrase; they’re not copying and pasting. That’s why focusing on clarity and structure matters more than chasing exact-match keywords.

👉 Click here to read how E-E-A-T and authority-building improve AI visibility: Content Marketing Strategies: Prioritizing Quality Over Quantity in the AI Age – nDash.com

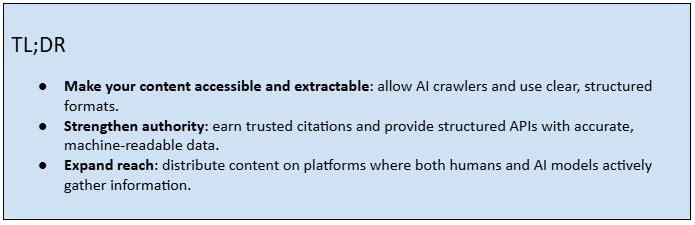

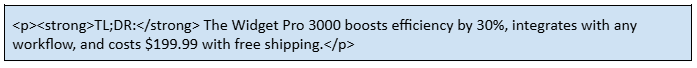

Lead With a TL;DR

A TL;DR (Too Long; Didn’t Read) serves as a strategic signal for AI, not just a courtesy for readers. LLMs scan content for direct, extractable answers. A concise summary at the top of your page guides them to the information they need to cite. When done right, a TL;DR increases the likelihood that your content is referenced or quoted in AI outputs.

Why a TL;DR works for AI and humans:

- Provides instant clarity by answering the query right away.

- Signals authority, since LLMs treat concise, confident statements as reliable.

- Improves usability for human visitors who prefer skimmable content.

How to craft an effective TL;DR:

- Keep it under 50 words: short, sharp, and on-topic.

- Directly answer the search intent: state the solution, not the background.

- Use bold formatting or headings to make it visually prominent.

Example format for a product page:
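As a hedged illustration, the sketch below pairs a sample TL;DR with a small check against the 50-word guideline above. The product, its specs, and the helper names are invented for the example.

```python
# Hypothetical TL;DR for a hypothetical product page.
TLDR = (
    "TL;DR: The Acme Standing Desk adjusts from 28 to 48 inches, "
    "supports 250 pounds, and ships assembled in three days. "
    "It suits home offices that need a sturdy, quiet desk under $500."
)


def word_count(text: str) -> int:
    """Count whitespace-separated words."""
    return len(text.split())


def is_valid_tldr(text: str, max_words: int = 50) -> bool:
    """True when the summary is labeled and stays under the word limit."""
    return text.startswith("TL;DR:") and word_count(text) < max_words


if __name__ == "__main__":
    print(word_count(TLDR), is_valid_tldr(TLDR))
```

A check like this could run in an editorial workflow so that summaries do not creep past the limit during later updates.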

Best Practices

- Place the TL;DR at the very top of the page.

- Refresh it when updating your content to keep responses accurate.

- Avoid filler; focus on the core answer that LLMs (and readers) are looking for.

Structured, concise formatting doesn’t just make your content easier for AI to parse; it also opens the door to more citations. Semrush found that about 90% of the pages ChatGPT cites rank below the top 20 in traditional search. In other words, you don’t need a first-page ranking to get noticed by AI.

Google’s AI Overviews back this up, citing Quora more than any other domain, with Reddit close behind.

The takeaway is simple: Clear, well‑organized content, shared across multiple platforms, can earn citations even if it’s not sitting at the top of Google’s results.

Think of the TL;DR as your featured snippet for AI, the first thing machines and users alike should see.

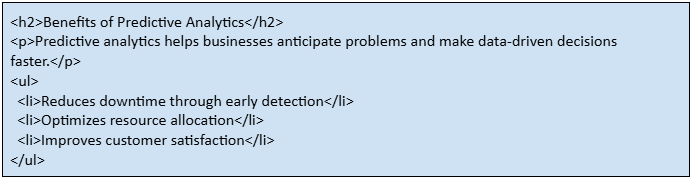

Prioritize Clean Formatting

Clean, structured formatting is critical for making your content easy to parse, both for AI crawlers and human readers. LLMs favor content that organizes information clearly, allows easy scanning, and follows a logical structure. When your layout follows clear patterns, models can extract information more accurately and confidently include your content in answers.

Why clean formatting matters:

- Improves machine readability. LLMs detect headings, lists, and patterns faster.

- Enhances user experience, keeping visitors engaged with easy-to-skim content.

- Boosts citation potential, since AI models favor content they can lift without confusion.

How to format content for AI visibility:

- Use H2 and H3 headings to structure topics logically.

- Keep paragraphs short, ideally two to three sentences, for improved readability.

- Use bullet points or numbered lists to present steps or data.

- Include tables where structured comparisons add value.

Example structure for an article section:
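A minimal sketch of such a section, written as Markdown inside a Python string, along with a small check that heading levels never skip a step. The desk topic and price tiers are placeholders, and the check assumes your pages are authored in Markdown with "#" headings.

```python
import re

# Hypothetical article section: one H2, two H3s, a list, and a table.
SECTION = """\
## How to Choose a Standing Desk

Pick a desk that fits your height, load, and budget.

### Key Specs to Compare

- Height range
- Weight capacity
- Warranty length

### Price Tiers

| Tier | Price range |
| --- | --- |
| Entry | Under $300 |
| Mid | $300 to $600 |
"""

HEADING = re.compile(r"^(#{1,6})\s+(.*)$")


def heading_levels(markdown: str) -> list[int]:
    """Return the level (2 for H2, 3 for H3, ...) of each heading in order."""
    return [len(m.group(1)) for line in markdown.splitlines() if (m := HEADING.match(line))]


def skipped_levels(markdown: str) -> list[tuple[int, int]]:
    """List (previous, current) pairs where a heading jumps more than one level down."""
    levels = heading_levels(markdown)
    return [(prev, cur) for prev, cur in zip(levels, levels[1:]) if cur > prev + 1]


if __name__ == "__main__":
    print(heading_levels(SECTION), skipped_levels(SECTION))
```

An empty result from skipped_levels means the hierarchy is consistent; a pair such as (2, 4) would flag an H2 followed directly by an H4.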

Best Practices

- Avoid long blocks of text. AI models struggle with unstructured content.

- Maintain consistent heading hierarchy (H2 > H3 > H4).

- Use descriptive headings that signal the content that follows.

With clear formatting, your pages become machine-friendly and user-friendly, increasing your chances of being surfaced in AI-generated answers.

Step 3: Build Trust Signals LLMs Recognize

Step 3 focuses on building machine-verifiable authority so LLMs treat your content as trustworthy and worth citing. Research drives the point home: GPT‑3.5 fabricated more than half of its citations, and nearly half of the rest were wrong. Even GPT‑4 isn’t immune; it fabricates about 18% of its citations and distorts another 24% of them.

Beyond crawlability, LLMs weigh how often other sources validate your claims, making third-party mentions essential for establishing authority.

To show up in AI-generated answers, your brand needs signals of authority that machines can detect and trust. As John Short, CEO of Compound Growth Marketing, explains, “How people talk about you on other sites will impact how you show up in the LLMs.”

👉 Click here to understand how machine‑readable authority and trust boost AI visibility: Best Practices for Modern Marketers: Content Creation and Navigating Google’s Ranking Factors – nDash.com

Get Featured in Trusted Sources

Visibility in AI-generated answers isn’t just about optimizing your site; it’s about being recognized across the wider web. LLMs assess credibility based on how often authoritative sources reference your content. More citations increase the likelihood of being included in responses.

Why trusted sources matter:

- AI cross-references multiple domains to validate accuracy.

- Mentions from credible sites increase your perceived authority.

- Diverse exposure in newsletters, blogs, and databases boosts discoverability.

Where to focus your efforts:

- Niche industry newsletters: Share original insights or thought leadership pieces.

- Respected publications: Contribute guest articles or expert commentary.

- Top 10 / Best Of roundups: Secure spots on curated lists that LLMs scan for references.

Example outreach target list:

- Industry newsletters (e.g., SaaS Weekly, Marketing Brew)

- High-authority blogs in your sector

- Community-driven knowledge bases (with proper citations)

Best Practices

- Provide data-backed insights that editors and curators want to feature.

- Ensure mentions include correct brand names and links for AI traceability.

- Keep contributions consistent; LLMs reward redundancy across sources.

Establishing a presence on reputable, high-traffic sites strengthens the signals AI models use to determine which brands to feature in answers.

Focus on Third-Party Mentions

For LLMs, repetition across credible sources equals validation. Search engines often weigh backlinks when judging authority. LLMs instead evaluate how often independent platforms reference your brand or content. Multiple mentions across different contexts help models confirm you’re a trusted source worth citing.

Why third-party mentions are critical:

- Redundancy builds trust. AI interprets repeated mentions as factual reinforcement.

- Broader context improves understanding, helping models link your brand to multiple topics.

- Diverse references boost authority, making you more likely to appear in AI outputs.

Ways to earn repeat mentions:

- PR strategies: Issue data-driven press releases or expert insights to media outlets.

- Podcast features: Guest appearances expose your expertise to new audiences and AI crawlers.

- Collaborations: Partner with influencers or brands for co-branded reports, studies, or webinars.

- Community engagement: Share helpful commentary in forums and Q&A platforms where AI scrapes discussions.

Example PR outreach checklist:

- Pitch one unique insight to five industry blogs each quarter

- Secure two to three guest podcast appearances annually

- Collaborate on at least one co-branded asset per year

Best Practices

- Target authoritative, topic-relevant outlets for stronger signals.

- Maintain message consistency across appearances so LLMs link mentions back to your expertise.

- Regularly track mentions to identify gaps and opportunities for more coverage.

Each additional independent reference strengthens your authority, giving AI models more reasons to surface your brand. nDash connects brands with expert creators who can generate the authoritative content LLMs recognize.

Authority signals build trust, but AI systems also need reliable access to your data, especially as traditional crawling fades. The next step is to make that data machine-consumable through APIs.

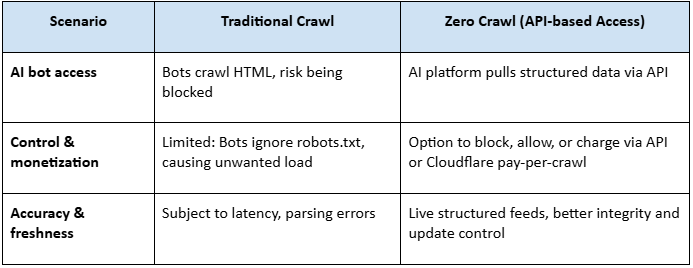

Step 4: Prepare for Zero-Crawl with Structured APIs

Step 4 prepares your content for AI models that pull live, structured data via APIs instead of traditional crawlers.

This “zero-crawl” approach gives brands greater control over how their information is accessed.

It also clarifies who can use the data and under what terms.

Many companies now explore monetization through API gateways, turning access into a revenue stream.

If your brand isn’t ready to supply data through these channels, you risk being left out of AI-powered answers even if your site is otherwise optimized.

👉 Click here to discover strategies for structuring content for AI retrieval (zero-click readiness): Navigating the ‘For Me’ Era: Strategies for AI-Driven Search Success – nDash.com

What “Zero Crawl” Means

Instead of bots like GPTBot scraping pages, AI assistants now pull live, structured data straight from APIs. Machine‑readable formats are everywhere, and schema.org alone appears on more than 45 million domains. Desktop pages are packed with structured data too:

- RDFa (62%)

- Open Graph (59%)

- Twitter Cards (40%)

- JSON‑LD (37%)

- Microdata (25%)

For brands, APIs mean more control over access, usage terms, and even monetization. Postman’s 2024 State of API Report shows 74% of organizations call themselves API‑first, and 62% are already monetizing their APIs. With bots making up nearly 30% of global web traffic and crawler activity climbing 18% year over year, structured, API‑driven access isn’t optional. It’s how your data stays visible in AI‑generated answers.

Why It Matters for Brands

Relying only on crawling is risky because gatekeepers like Cloudflare can block AI traffic, even to optimized sites.

Brands that provide structured API endpoints or establish partnerships ensure inclusion in AI-generated answers. Those that don’t risk being excluded from visibility entirely.

Example Scenarios

Best Practices for Preparing Your Brand

- Evaluate your data APIs: Determine if your content is already accessible through APIs (e.g., product feeds, knowledge bases, news feeds, documentation).

- Consider partnerships: Can major AI platforms, search assistants, or data aggregators access your API? A partnership can boost visibility.

- Explore monetization models: Infrastructure options like Cloudflare’s pay‑per‑crawl let you define pricing or set free access policies based on unique crawlers.

- Document your data schema and endpoints: Well-documented, clearly structured APIs are more likely to be integrated and less likely to get filtered or blocked.

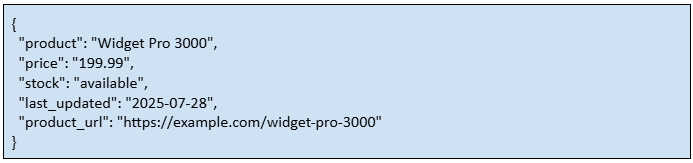

Publish an MCP-Ready Endpoint

AI models are moving away from scraping and toward consuming structured data directly, which makes having a machine‑ready endpoint non‑negotiable. Postman’s latest survey backs this up:

- 74% of companies now call themselves API‑first (up from 66% in 2023)

- Over 62% earn revenue from their APIs

- 67% of teams using collaborative workspaces can spin up an API in under a week

Set up an endpoint that follows a standard like the Model Context Protocol (MCP), and AI tools that support it can pull the latest information straight from your source. It can also improve your chances of being cited and help preserve your visibility as zero‑crawl access becomes more common.

Why an MCP-ready endpoint matters:

- Ensures accuracy: AI retrieves the most up-to-date information on products and services.

- Boosts citation opportunities: Structured APIs make it easier for LLMs to credit your brand.

- Increases visibility: Real-time data integration makes your site a preferred source.

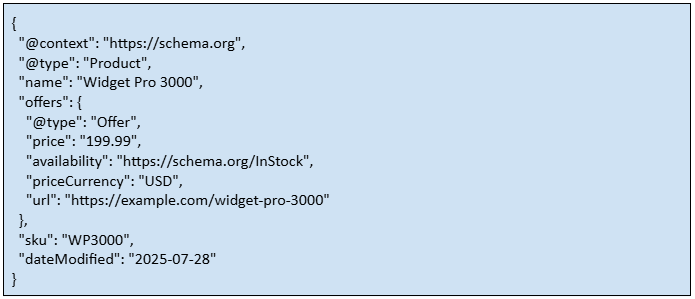

What to include in your endpoint:

- Key product attributes (name, price, availability)

- Timestamps (last updated date for freshness)

- Links to additional data (manuals, documentation, specs)

- Clear structure that machines can parse consistently

Example JSON output for a product API:
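The sketch below builds one possible payload covering the fields listed above: key attributes, a freshness timestamp, and links to supporting documents. Field names, the product, and the URLs are assumptions made for illustration. It shows only the data shape and does not implement the MCP protocol itself.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def product_payload(
    name: str,
    price: float,
    currency: str,
    in_stock: bool,
    links: dict[str, str],
    updated_at: Optional[datetime] = None,
) -> dict:
    """Assemble a machine-readable product record with a freshness timestamp."""
    updated_at = updated_at or datetime.now(timezone.utc)
    return {
        "name": name,
        "price": {"amount": price, "currency": currency},
        "availability": "in_stock" if in_stock else "out_of_stock",
        "last_updated": updated_at.isoformat(),
        "links": links,
    }


# Hypothetical product used only to show the output shape.
example = product_payload(
    name="Acme Standing Desk",
    price=449.0,
    currency="USD",
    in_stock=True,
    links={
        "manual": "https://yourdomain.com/docs/desk-manual.pdf",
        "specs": "https://yourdomain.com/products/desk/specs",
    },
    updated_at=datetime(2025, 1, 15, 9, 30, tzinfo=timezone.utc),
)

if __name__ == "__main__":
    print(json.dumps(example, indent=2))
```

Keeping the timestamp in ISO 8601 format and the field names stable across products makes the output easier for any consumer to parse consistently.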

Best Practices for Deploying MCP Endpoints

- Host your API on a stable, secure URL.

- Use standardized field names for consistency.

- Update data frequently to maintain freshness.

- Document the endpoint so AI crawlers understand its structure.

Providing an MCP-ready endpoint makes your data machine-accessible, current, and reliable, increasing the likelihood that AI models will use and cite your content in real time.

Think Beyond Static Pages

In the AI-driven search era, static web pages are no longer enough to keep your brand visible. LLMs prioritize information that is current, structured, and easily retrievable. While traditional content pages remain valuable, they need support from data sources that AI can access and trust.

Why static pages fall short:

- Aging content quickly loses relevance, causing AI models to pull outdated information that undermines your brand’s authority.

- Without a machine-readable structure, critical details remain hidden, reducing the chances that AI can extract and present them accurately.

- The absence of real-time updates means users may receive obsolete answers, weakening trust and lowering engagement with your content.

How to move beyond static content:

- Use structured formats, such as JSON, XML, or schema.org markup, for key information.

- Provide APIs or data feeds to supply LLMs with the latest product and pricing details.

- Integrate dynamic elements that update automatically, such as inventory or availability data.

Example schema snippet for product data:
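One common way to express this is schema.org Product markup in JSON-LD. The sketch below generates such a snippet in Python. The product details and URL are hypothetical, and the property set is a small subset chosen for illustration rather than a complete or validated markup.

```python
import json


def product_jsonld(
    name: str,
    description: str,
    sku: str,
    price: float,
    currency: str,
    in_stock: bool,
    url: str,
) -> dict:
    """Build a schema.org Product object with a single Offer."""
    availability = "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock"
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "url": url,
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": availability,
        },
    }


def as_script_tag(data: dict) -> str:
    """Wrap JSON-LD in the script tag used to embed it in an HTML page."""
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


# Hypothetical product, for illustration only.
SNIPPET = as_script_tag(
    product_jsonld(
        name="Acme Standing Desk",
        description="Height-adjustable desk for home offices.",
        sku="DESK-001",
        price=449,
        currency="USD",
        in_stock=True,
        url="https://yourdomain.com/products/desk",
    )
)

if __name__ == "__main__":
    print(SNIPPET)
```

Generating the markup from the same source as your inventory data keeps price and availability in step with the page.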

Best Practices for Dynamic, AI-Friendly Data:

- Automate updates so your content reflects real-time changes without manual edits.

- Combine structured data with human-readable pages to serve both audiences.

- Monitor AI outputs to ensure they pull your latest information correctly.

Moving beyond static pages to adopt structured, dynamic formats makes your content more useful to AI models. This approach ensures your brand remains visible in real-time answers.

APIs provide real-time visibility, but evergreen content plays a different role: inclusion in future GPT training datasets depends on whether that content meets the reference-grade standards AI models favor.

Step 5: Get Into Future GPT Training Sets

Step 5 involves creating reference-grade content that AI training datasets are more likely to include. There’s no official public estimate from OpenAI on what percentage of all web content becomes training data. However, OpenAI’s published GPT‑3 paper reports the weight each source carried in that model’s training mix:

- ~ 60% from Common Crawl: based on filtered web crawl content

- ~ 22% from WebText2: pages linked from Reddit posts (≥3 upvotes)

- ~ 8% each from Books1 and Books2

- ~ 3% from English-language Wikipedia

Together, these five sources make up essentially all of GPT‑3’s training mix (the rounded weights sum to about 101%), and most of it is filtered web content. To earn a spot, your content needs to be reference-grade: highly structured, well-cited, and authoritative.

Follow a “Reference Page” Recipe

Future GPT training sets favor pages that function as definitive references. Provide verifiable facts, downloadable datasets, and clear sectioning to improve inclusion odds.

Why reference pages matter:

- LLMs prefer authoritative sources that include verifiable data.

- Structured organization makes it easier for AI to parse and extract information.

- Downloadable assets give models raw material they can ingest without misinterpretation.

How to build a training-friendly reference page:

- Start with a compelling statistic to establish authority and relevance.

- Cite credible sources to reinforce trustworthiness.

- Use structured headings (H2, H3) to define sections clearly.

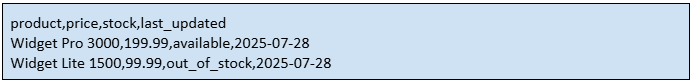

- Include datasets in CSV or JSON format for machine consumption.

- Add metadata (e.g., schema.org) to strengthen machine readability.

Example of a downloadable dataset format:
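A minimal sketch of exporting the same records as both CSV and JSON using Python's standard library. The column names and rows are invented for illustration. Real datasets would carry your own fields and sources.

```python
import csv
import io
import json

# Hypothetical rows; each record carries its own "last_updated" date.
ROWS = [
    {"sku": "DESK-001", "name": "Acme Standing Desk", "price_usd": 449, "last_updated": "2025-01-15"},
    {"sku": "CHAIR-002", "name": "Acme Task Chair", "price_usd": 229, "last_updated": "2025-01-10"},
]


def to_csv(rows: list[dict]) -> str:
    """Serialize rows to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()), lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows: list[dict]) -> str:
    """Serialize rows to indented JSON text."""
    return json.dumps(rows, indent=2)


if __name__ == "__main__":
    print(to_csv(ROWS))
    print(to_json(ROWS))
```

The two strings could be saved as files such as /data/products.csv and /data/products.json and linked from the reference page.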

Best Practices for Reference Pages:

- Refresh statistics and datasets regularly to keep them current.

- Avoid filler; focus on facts, numbers, and citations.

- Provide clear download links to structured data files (e.g., /data/products.json).

Following this recipe helps you create content that is credible, well-structured, and ready for AI training, which increases the likelihood of inclusion in future GPT datasets. Even reference-grade content needs exposure, though: distribution across forums and social platforms reinforces the authority signals LLMs use when selecting sources.

As Alisa Scharf, a writer for Seer Interactive, notes, “Training data will continue to be a critical component of LLMs for years to come. But updates to training data will likely come as new frontier models are released… brands who seek to be referenced within the innate training data of an LLM must prepare to wait months or even years to be included in this dataset.”

Keep It Fresh

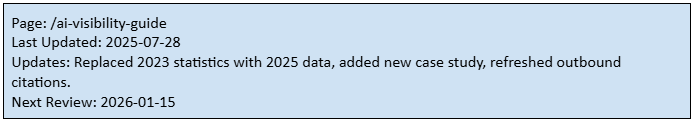

Freshness isn’t just an SEO ranking factor; it’s also a critical signal for LLMs. They prefer recently updated, factually accurate pages over outdated resources.

Scharf states, “We believe you should allow LLMs to scrape your website content. This website content should be accessible to bots, and include factual, descriptive, well‑structured content. Tip: Up‑to‑date content is important. If your content isn’t dated or is over 1 year old, prioritize updates accordingly.”

When AI detects that your content is regularly maintained, it’s more likely to pull and cite it in answers and to include it in future training sets.

Why freshness matters:

- Outdated information can lower your credibility in AI outputs.

- Timely data increases the likelihood of being surfaced in real-time responses.

How to keep your content fresh:

- Set up a recurring monitoring process, combining analytics dashboards, automated alerts, and scheduled content reviews, to identify when updates are necessary.

- Use change-detection and content tracking tools to identify outdated pages before they impact visibility. Platforms like Screaming Frog, Sitebulb, and SEOmonitor can flag broken links, old stats, and declining traffic, helping you schedule timely updates.

- Refresh case examples to reflect current trends or recent success stories.

- Revise outdated sections instead of letting them stagnate.

- Track content performance using tools like Google Search Console, and set automated alerts to flag when engagement or rankings decline.

Example of an update log you can maintain internally:
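A minimal sketch of such a log as a Python data structure, with a helper that flags pages whose most recent update is more than a year old. The entries, owners, and the 365-day threshold are assumptions for illustration. A spreadsheet with the same columns would serve equally well.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class UpdateEntry:
    url: str
    last_updated: date
    change: str
    owner: str


# Hypothetical log entries.
UPDATE_LOG = [
    UpdateEntry("/guides/llm-optimization", date(2024, 1, 5), "Published", "Editorial"),
    UpdateEntry("/guides/llm-optimization", date(2025, 3, 10), "Refreshed statistics", "Editorial"),
    UpdateEntry("/pricing", date(2024, 2, 1), "Updated plan names", "Product"),
]


def stale_pages(log: list[UpdateEntry], today: date, max_age_days: int = 365) -> list[str]:
    """Return URLs whose latest logged update is older than max_age_days."""
    latest: dict[str, date] = {}
    for entry in log:
        if entry.url not in latest or entry.last_updated > latest[entry.url]:
            latest[entry.url] = entry.last_updated
    return sorted(url for url, updated in latest.items() if (today - updated).days > max_age_days)


if __name__ == "__main__":
    print(stale_pages(UPDATE_LOG, today=date(2025, 6, 1)))
```

Running the helper on a schedule, for example during a quarterly audit, turns the log into a simple to-do list of pages to revisit.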

Best Practices for Maintaining Freshness

- Establish a content audit schedule (e.g., quarterly reviews).

- Include a “last updated” date on the page to signal recency to both humans and machines.

- Combine minor updates (data points, examples) with major revisions (new sections) as needed.

Consistently maintained pages stay relevant for users over time. They also have a higher chance of being included in AI training sets and live LLM-generated answers.

Freshness ensures your content remains accurate, but distribution ensures it’s seen. After updating, share it across platforms where both AI and humans engage.

Step 6: Distribute Content Where LLMs Lurk

Step 6 focuses on distributing your content across platforms where AI models actively learn and gather signals of authority. AI doesn’t just pull from your website; it learns from the places where users are most active. That’s why distributing your content across multiple platforms isn’t optional; it’s how you stay visible in AI‑generated answers.

- Platforms used by LLMs: Semrush found that Google’s AI Overviews heavily cite platforms like Quora and Reddit. Combine that with data showing 50% of ChatGPT links go to forums, publishers, or academic sources, and it’s clear that community‑driven sites hold real influence over what AI references.

- LLM user tasks: Bain reports that 68% of users rely on LLMs for research, 48% for news and weather, and 42% for shopping recommendations. These numbers hint at where and what type of content is most likely to surface.

- Bot traffic proportion: Bots already make up nearly 30% of global web traffic, and AI crawler traffic is growing 18% year over year. Distributing content on platforms that these bots actively crawl increases your odds of being part of their learning loop.

👉 Click here to learn how AI‑powered marketing platforms and community distribution fuel AI visibility: The Future of AI-Powered Marketing Platforms: Revolutionizing Customer Engagement – nDash.com

Repurpose Strategically

Repurposing your content is one of the most effective ways to amplify your reach across the platforms where both humans and AI models gather information. To do this, convert blog posts into LinkedIn carousels, Reddit threads, YouTube shorts, and other formats that strengthen your authority signals.

LLMs learn from diverse sources such as social networks, forums, and video transcripts to build their understanding of topics. Diversifying your content formats maximizes visibility in these ecosystems and increases your chances of being cited in AI-generated answers.

Why repurposing works:

- Expands your footprint across multiple high-value platforms.

- Creates redundancy that AI interprets as confirmation of authority.

- Engages audiences where they consume content most actively.

How to repurpose for AI-friendly visibility:

- Transform blog posts into engaging LinkedIn carousels to boost engagement among professionals.

- Condense articles into Reddit thread summaries that provide value in community discussions.

- Script content for YouTube shorts to capture audiences in video format while feeding searchable transcripts to LLMs.

- Convert key insights into infographics or PDFs that can circulate across multiple channels.

Example content repurposing workflow:

- Draft core blog post with structured headings and data.

- Extract key points for LinkedIn carousel slides.

- Write a 300-word Reddit thread version with a community-focused tone.

- Create a 60-second YouTube short script summarizing the main takeaway.

Best Practices for Repurposing

- Keep messaging consistent across all formats so AI can connect the mentions.

- Tailor tone and style to match each platform’s audience.

- Monitor which repurposed assets gain the most traction to refine your strategy.

Applied together, these practices turn one piece of content into several consistent signals that AI can link back to your brand.

Prioritize Natural, Helpful Content

Natural content reads as organic rather than manipulative. Create it by providing actionable insights, weaving in data naturally, and writing conversationally to engage both users and models.

Why natural content matters:

- AI detects patterns of authenticity, favoring content that feels organic rather than manipulative.

- Helpful insights build trust, increasing the likelihood of citations.

- Human engagement signals (comments, shares, upvotes) reinforce credibility to both models and users.

How to create AI-friendly, authentic content:

- Write conversationally, as if speaking to your audience directly.

- Provide actionable value, such as tips, examples, or expert commentary.

- Avoid forced backlink schemes. AI models recognize unnatural linking patterns.

- Use data naturally, weaving statistics and sources into meaningful context.

Example of natural, helpful content snippet:

Tip: When optimizing for AI search, focus on clarity. Instead of stuffing keywords, answer questions directly. For example, “LLM optimization helps brands gain visibility in AI responses by structuring data for machine consumption.”

Best Practices for Maintaining Authenticity

- Audit your content for fluff and redundancy, and keep only what adds value.

- Incorporate quotes from real experts to strengthen credibility.

- Monitor community feedback to refine tone and ensure your content resonates.

When you prioritize helpfulness over tactics, your content becomes a trusted resource. Trust boosts user engagement and strengthens the likelihood that AI models will surface and cite your content.

With all six steps complete, the next question is how LLMO fits alongside SEO. Are they competing strategies or complementary forces?

Is LLM Optimization Replacing SEO?

No, LLM Optimization doesn’t replace SEO; it complements it by ensuring visibility in AI-generated answers while SEO serves human clicks.

While traditional SEO continues to play a vital role in attracting human visitors who still click through to websites, AI-generated answers are reshaping how users access information. To remain visible, brands must now optimize for both search engines and AI models.

👉 Click here to equip your team with the most effective SEO tools and strategies: SEO Tools for B2B Content Marketing: A Practical Guide – nDash.com

Why Both Strategies Matter

- SEO drives human clicks from organic search results.

- LLMO drives machine citations within AI-generated summaries and chat responses.

- Combined efforts ensure visibility in both traditional and AI-powered search environments.

Key Differences Between SEO and LLMO

- SEO focuses on keyword rankings, backlinks, and on-page optimization.

- LLMO prioritizes structured, authoritative, machine-readable content that AI can easily extract and trust.

- Together, they form a dual visibility strategy for maximum reach.

Example Approach for a Balanced Strategy

- Maintain SEO best practices for human discovery.

- Add structured data and llms.txt to guide AI comprehension.

- Secure third-party mentions to strengthen machine-verifiable authority.

Investing in both SEO and LLMO allows brands to compete in traditional rankings. It also improves their chances of appearing in AI-generated answers that influence how users consume content today.

Ultimately, the two approaches work best together, yet brands must act quickly to align with the AI-driven future.

Final Thoughts on LLM Optimization for AI Visibility

AI-driven search is transforming how people find and trust information. Traditional SEO alone no longer guarantees visibility because users increasingly rely on AI-generated answers instead of clicking through links. LLM Optimization ensures your content remains structured, credible, and accessible to both humans and machines.

Apply the six steps outlined in this guide to strengthen your AI visibility and secure your share of voice in the AI-driven search landscape.

Looking for expert help? nDash connects brands with top-tier writers who create structured, authoritative content that LLMs trust and customers can’t ignore.